Manage general content with data streams


Data streams are designed for time series data. If you want to manage general content (data without timestamps) with data streams, you can set up an ingest pipeline that adds a timestamp field to your general content at ingest time. This gives you the benefits of time-based data management.

For example, search use cases such as knowledge base, website content, e-commerce, or product catalog search might require you to frequently index general content (data without timestamps). As a result, your index can grow significantly over time, which might affect storage requirements, query performance, and cluster health. Following the steps in this procedure (adding a timestamp field and moving to ILM-managed data streams) can help you rotate your indices more simply, based on their size or lifecycle phase.

To move your general content from indices to a data stream:

- Create an ingest pipeline to process your general content and add a @timestamp field.
- Create a lifecycle policy that meets your requirements.
- Create an index template that uses the created ingest pipeline and lifecycle policy.
- Optional: If you have an existing, non-managed index and want to migrate your data to the data stream you created, reindex with a data stream.
- Update your ingest endpoint to target the created data stream.
- Optional: Use the ILM explain API to get status information for your managed indices. For more information, refer to Check lifecycle progress.
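A minimal example of an ILM explain request, assuming the movetods data stream from this procedure already exists. Targeting the data stream is expected to return one entry per backing index, including the managing policy (indextods in this example) and the current phase and action. Treat the exact response fields as version-dependent.

```console
# Report ILM status for every backing index of the movetods data stream
GET movetods/_ilm/explain

# Limit the output to managed indices that are in an error state
GET movetods/_ilm/explain?only_errors=true
```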

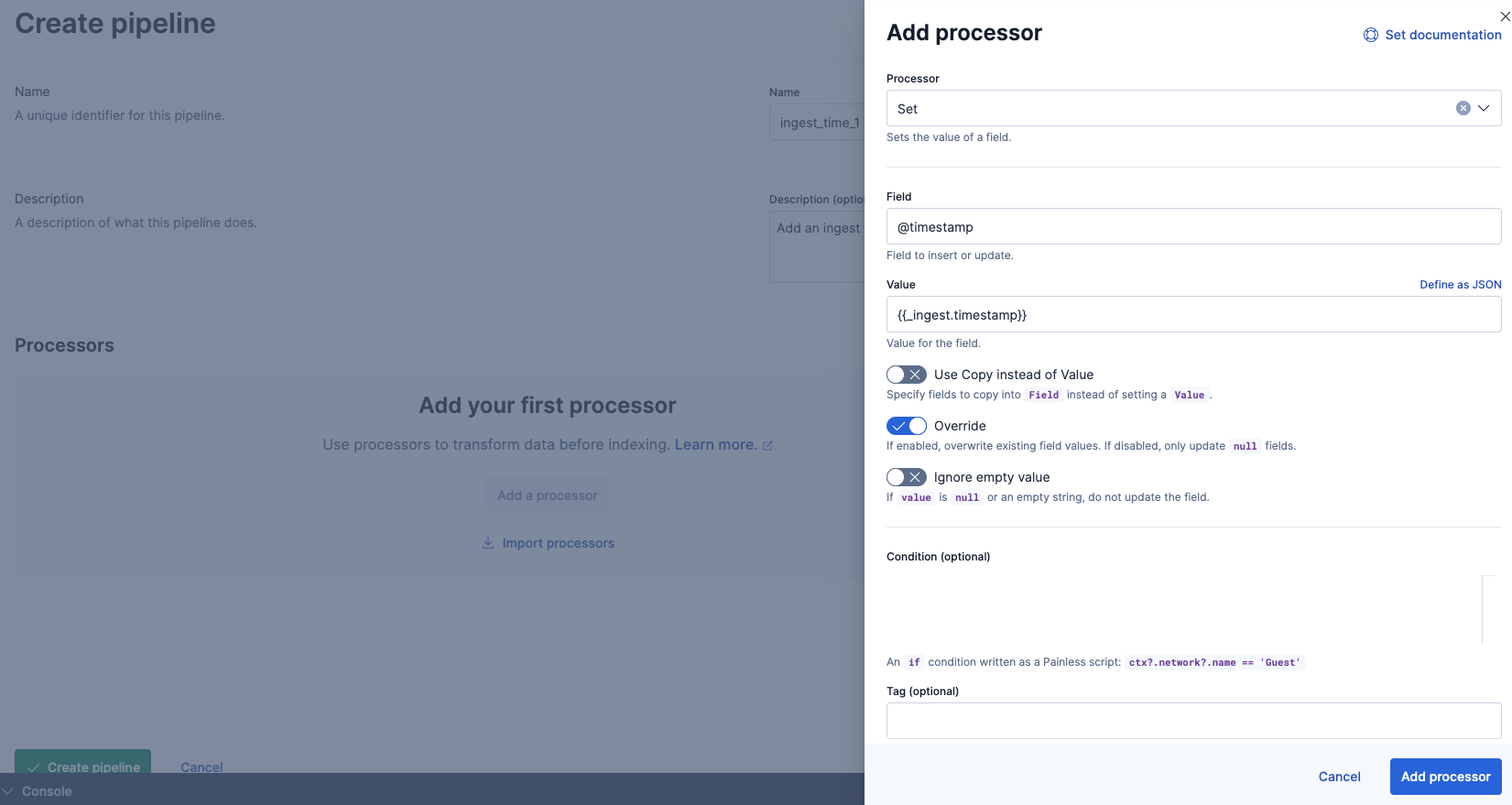

You can create an ingest pipeline that uses the set processor to add a @timestamp field. You can do this in Kibana or with the create or update pipeline API.

To add an ingest pipeline in Kibana, go to Stack Management > Ingest Pipelines, and then select Create pipeline > New pipeline.

Configure the pipeline with a name, description, and a Set processor that adds the @timestamp field with a value of {{_ingest.timestamp}}.

Use the API to add an ingest pipeline:

PUT _ingest/pipeline/ingest_time_1
{
  "description": "Add an ingest timestamp",
  "processors": [
    {
      "set": {
        "field": "@timestamp",
        "value": "{{_ingest.timestamp}}"
      }
    }
  ]
}
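Before using the pipeline, you can check it with the simulate pipeline API. This is a sketch: the title field in the sample document is hypothetical and stands in for your own general content. The response is expected to show the same document with an added @timestamp field whose value is the time the pipeline ran.

```console
# Run a hypothetical sample document through ingest_time_1 without indexing it
POST _ingest/pipeline/ingest_time_1/_simulate
{
  "docs": [
    {
      "_source": {
        "title": "Getting started with our product"
      }
    }
  ]
}
```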

A lifecycle policy specifies the phases in the index lifecycle and the actions to perform in each phase. A lifecycle can have up to five phases: hot, warm, cold, frozen, and delete.

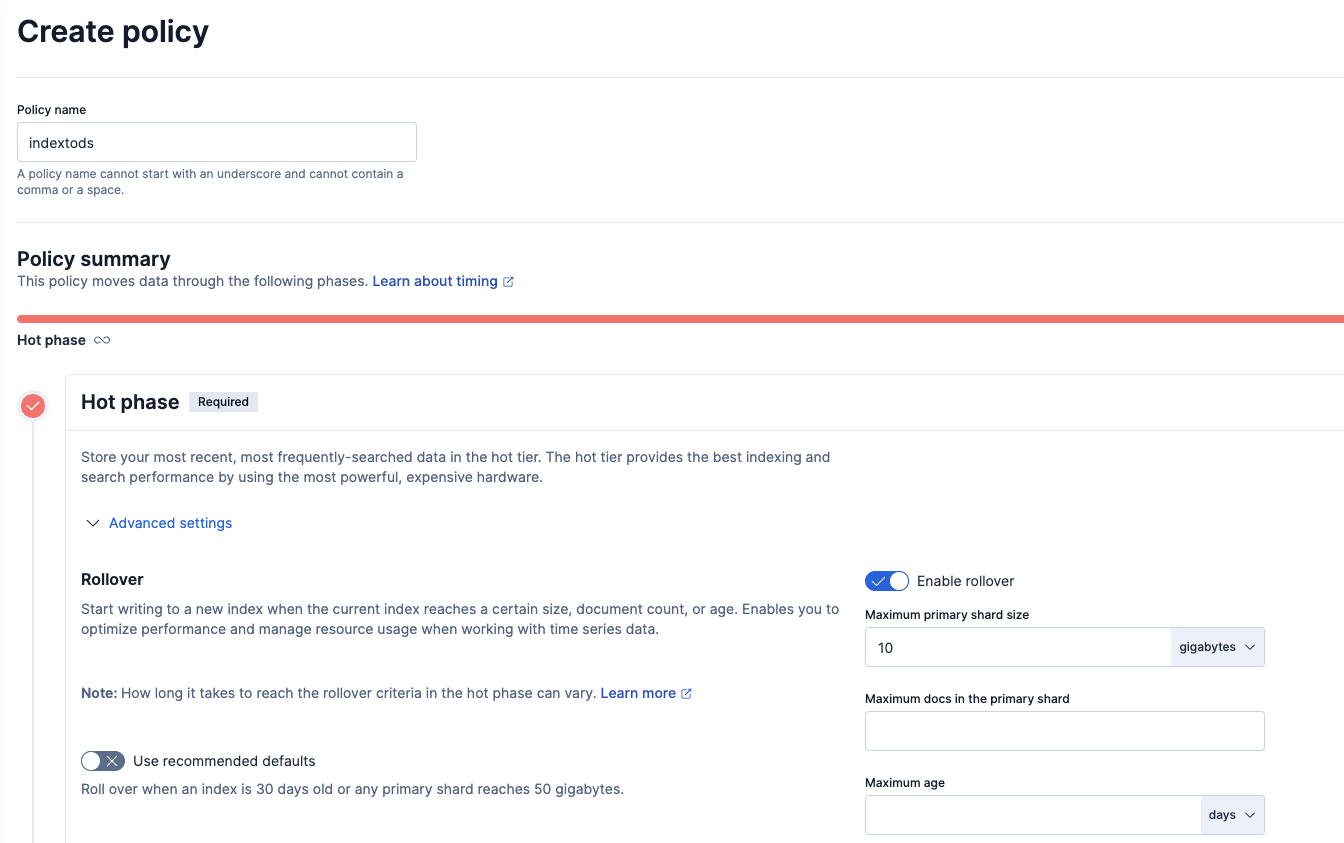

For example, you might define a policy named indextods that is configured to roll over when the primary shard size reaches 10 GB.

You can create the policy in Kibana or with the create or update policy API.

To create the policy in Kibana, open the menu and go to Stack Management > Index Lifecycle Policies. Click Create policy.

In the Hot phase, by default, an ILM-managed index rolls over when either:

- It reaches 30 days of age.

- One or more primary shards reach 50 GB in size.

Turn off Use recommended defaults to adjust these values, and configure the index to roll over when a primary shard reaches 10 GB.

Use the API to create a lifecycle policy:

PUT _ilm/policy/indextods
{
  "policy": {
    "phases": {
      "hot": {
        "min_age": "0ms",
        "actions": {
          "set_priority": {
            "priority": 100
          },
          "rollover": {
            "max_primary_shard_size": "10gb"
          }
        }
      }
    }
  }
}
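To confirm that the policy was stored as intended, you can retrieve it with the get lifecycle policy API. This minimal sketch reuses the indextods name from the example above. The response is expected to contain the hot phase with the set_priority and rollover actions.

```console
# Retrieve the indextods policy definition
GET _ilm/policy/indextods
```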

For more information about lifecycle phases and available actions, refer to Create a lifecycle policy.

To use the created lifecycle policy, you configure an index template that uses it. When creating the index template, specify the following details:

- the name of the lifecycle policy, which in our example is indextods
- the ingest pipeline that enriches the data by adding the @timestamp field, which in our example is ingest_time_1
- that the template is data stream enabled, by including the data_stream definition
- the index pattern, which ensures that this template will be applied to matching indices and in our example is movetods

You can create the template in Kibana or with the create or update index template API.

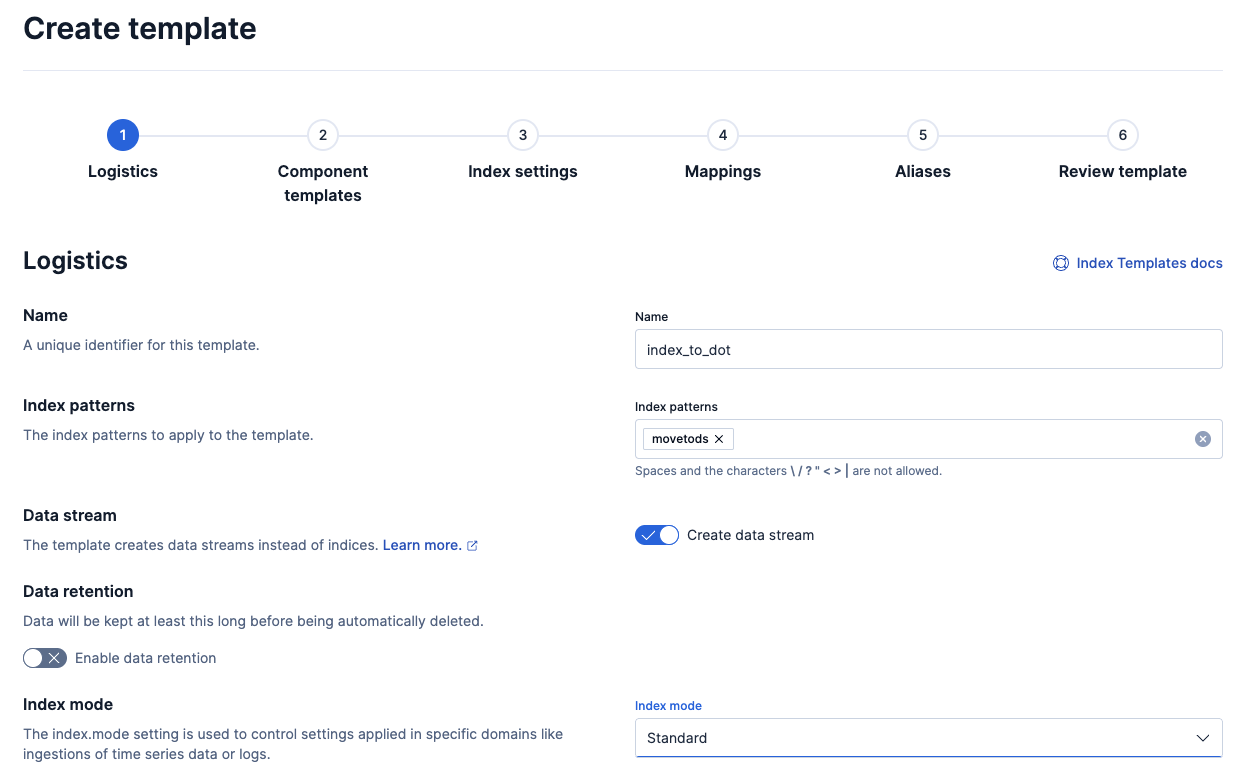

To create an index template in Kibana, complete these steps:

Go to Stack Management > Index Management. In the Index Templates tab, select Create template.

On the Logistics page:

- Specify the name of the template. For example, index_to_dot.
- Specify a pattern to match the indices you want to manage with the lifecycle policy. For example, movetods.
- Turn on the Create data streams toggle.
- Set the index mode to Standard.

Optional: On the Component templates page, use the search and filter tools to select any component templates to include in the index template. The index template will inherit the settings, mappings, and aliases defined in the component templates and apply them to indices when they're created.

On the Index settings page, specify the lifecycle policy and ingest pipeline you want to use. For example, indextods and ingest_time_1:

{
  "lifecycle": {
    "name": "indextods"
  },
  "default_pipeline": "ingest_time_1"
}

On the Mappings page, customize the fields and data types used when documents are indexed into Elasticsearch. For example, select Load JSON and include these mappings:

{
  "_source": {
    "excludes": [],
    "includes": [],
    "enabled": true
  },
  "_routing": {
    "required": false
  },
  "dynamic": true,
  "numeric_detection": false,
  "date_detection": true,
  "dynamic_date_formats": [
    "strict_date_optional_time",
    "yyyy/MM/dd HH:mm:ss Z||yyyy/MM/dd Z"
  ]
}

On the Review page, confirm your selections. You can check your selected options, as well as both the format of the index template that will be created and the associated API request.

The newly created index template will be used for all new indices with names that match the specified pattern, and for each of these, the specified ILM policy will be applied.

For more information about configuring templates in Kibana, refer to Manage index templates.

Use the create index template API to create an index template that specifies the created ingest pipeline and lifecycle policy:

PUT _index_template/index_to_dot
{
  "template": {
    "settings": {
      "index": {
        "lifecycle": {
          "name": "indextods"
        },
        "default_pipeline": "ingest_time_1"
      }
    },
    "mappings": {
      "_source": {
        "excludes": [],
        "includes": [],
        "enabled": true
      },
      "_routing": {
        "required": false
      },
      "dynamic": true,
      "numeric_detection": false,
      "date_detection": true,
      "dynamic_date_formats": [
        "strict_date_optional_time",
        "yyyy/MM/dd HH:mm:ss Z||yyyy/MM/dd Z"
      ]
    }
  },
  "index_patterns": [
    "movetods"
  ],
  "data_stream": {
    "hidden": false,
    "allow_custom_routing": false
  }
}
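Before any data is indexed, you can preview what the template will apply by using the simulate index template API. This sketch assumes the index_to_dot template from the previous request. The response is expected to include the indextods lifecycle name and the ingest_time_1 default pipeline under template.settings, together with the mappings defined above.

```console
# Preview the settings and mappings that index_to_dot would apply to a new backing index
POST _index_template/_simulate/index_to_dot
```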

Create a data stream using the _data_stream API:

PUT /_data_stream/movetods

You can view the lifecycle status of your data stream, including details about its associated ILM policy.
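As a minimal sketch, the get data stream API returns this information for the movetods data stream. The response is expected to list the backing indices and name the index template (index_to_dot) and ILM policy (indextods) that apply to the stream; exact fields can vary between versions.

```console
# Show backing indices, the matching index template, and the ILM policy for movetods
GET _data_stream/movetods
```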

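If you want to copy your documents from an existing index to the data stream you created, reindex with a data stream using the _reindex API. Replace indextods in source.index with the name of your existing index, and keep dest.op_type set to create, because data streams accept only create operations: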

POST /_reindex
{
  "source": {
    "index": "indextods"
  },
  "dest": {
    "index": "movetods",
    "op_type": "create"
  }
}

For more information, check Reindex with a data stream.
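After the reindex completes, a quick check along these lines can confirm that the documents arrived in the data stream with a timestamp. This is a sketch that assumes the template's default_pipeline (ingest_time_1) ran during the reindex, so each document is expected to carry an ingest-time @timestamp.

```console
# Count the documents now stored in the data stream
GET movetods/_count

# Return the most recently timestamped document to check that @timestamp is present
GET movetods/_search
{
  "size": 1,
  "sort": [
    { "@timestamp": "desc" }
  ]
}
```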

If you use Elastic clients, scripts, or any other third-party tool to ingest data to Elasticsearch, make sure you update them to target the created data stream.
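For example, a client that previously wrote to an index can send the same kind of documents to the data stream. This sketch assumes the movetods data stream from this procedure; the title and body fields are hypothetical. Because the requests do not include @timestamp, the template's default pipeline is expected to add it. Data streams accept only create operations, so bulk requests use the create action rather than index.

```console
# Index one document with an auto-generated ID (hypothetical fields, no @timestamp)
POST movetods/_doc
{
  "title": "Example article",
  "body": "General content without a timestamp."
}

# Bulk requests to a data stream must use the create action
POST movetods/_bulk
{ "create": {} }
{ "title": "Another example article", "body": "More general content." }
```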